The Agentic Shift: Why Reliable AI Depends on Architecture, Not Bigger Models

The artificial intelligence landscape is undergoing a fundamental transition. We are moving from stateless, prompt-based interactions to stateful, autonomous agentic systems. This evolution, often termed the Agentic Shift, marks a move away from viewing Large Language Models (LLMs) as solitary oracles and toward treating them as cognitive engines within broader, engineered systems.

The Limitations of Single-Turn AI

For the past several years, the dominant interaction model with Generative AI has been the single-turn or stateless exchange. In this paradigm, a user provides a prompt, and the model returns a completion. While effective for summarization, creative writing, and basic code generation, this approach hits a complexity ceiling when applied to enterprise-grade problem solving.

Single-turn LLM calls are inherently brittle. They rely heavily on the user's ability to provide perfect context in a single window and the model's ability to reason without intermediate verification. When a single LLM attempts to solve a multifaceted problem, it often fails to maintain coherence across sub-tasks or hallucinates details due to context dilution.

The core problem driving the industry today is that while LLMs are reasoning engines, they are not autonomous agents by default. They lack episodic memory: the ability to retain state across distinct actions. They lack self-correction: the capacity to recognize an error in their own output and iteratively refine it. And they struggle with tool orchestration: the dynamic ability to select and execute external software tools and to validate the results those tools return.
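Two of these gaps, retained state and validated tool results, can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: `AgentState`, `call_tool`, and the `lookup_price` tool are hypothetical names, not part of any particular framework.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class AgentState:
    """Episodic memory: a record of every tool call and its outcome."""
    steps: List[Dict[str, Any]] = field(default_factory=list)

    def remember(self, tool: str, args: Dict[str, Any], result: Any, valid: bool) -> None:
        self.steps.append({"tool": tool, "args": args, "result": result, "valid": valid})


def call_tool(
    state: AgentState,
    name: str,
    tool: Callable[..., Any],
    validate: Callable[[Any], bool],
    **args: Any,
) -> Any:
    """Run a tool, check its result, and record the step before returning."""
    result = tool(**args)
    valid = validate(result)
    state.remember(name, args, result, valid)
    if not valid:
        raise ValueError(f"tool {name!r} returned an invalid result: {result!r}")
    return result


def lookup_price(sku: str) -> float:
    """Hypothetical tool: returns -1.0 for an unknown SKU."""
    return {"A1": 9.99}.get(sku, -1.0)


state = AgentState()
price = call_tool(state, "lookup_price", lookup_price, lambda r: r >= 0, sku="A1")
```

Because every call is recorded in `state.steps`, including failed ones, a later step (or a human reviewer) can see what was tried and why it was rejected.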

From Workflows to Agents

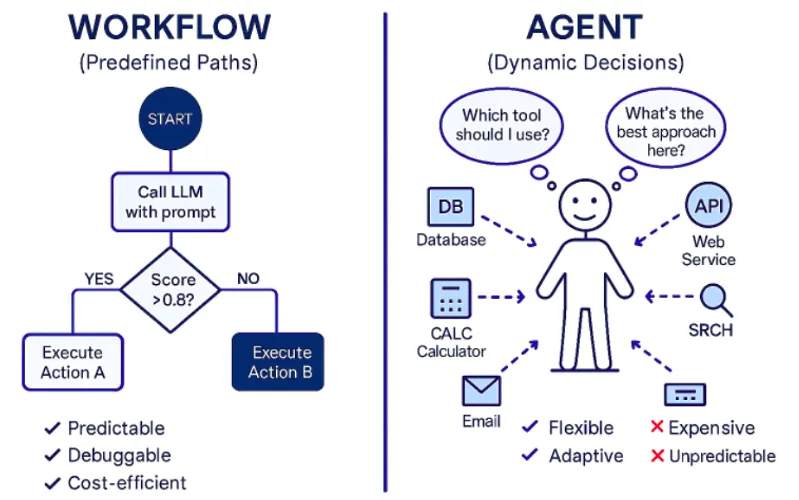

The industry is shifting from Workflows to Agents. It is critical to distinguish between these two concepts. Workflows operate on predefined code paths where control flow moves from step A to B to C in a rigid, prescriptive manner. They break when inputs deviate from expectations, and the LLM serves merely as a processor within the structure.

Agents, by contrast, feature dynamic decision-making by the LLM itself. They are adaptive, replanning based on context. The LLM becomes the director of the process rather than just a component within it. This makes agents ideal for complex, ambiguous problem solving where the path forward cannot be predetermined.
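The distinction can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the tools (`extract`, `normalize`, `summarize`) and the rule-based `stub_policy` are hypothetical stand-ins, with the policy occupying the place where an LLM would choose the next action.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools standing in for real software integrations.
def extract(text: str) -> str:
    return text.strip()


def normalize(text: str) -> str:
    return text.lower()


def summarize(text: str) -> str:
    return text[:20]


TOOLS: Dict[str, Callable[[str], str]] = {
    "extract": extract,
    "normalize": normalize,
    "summarize": summarize,
}


def run_workflow(text: str) -> str:
    """Workflow: the code path A -> B -> C is fixed in advance."""
    for step in (extract, normalize, summarize):
        text = step(text)
    return text


def stub_policy(text: str, history: List[str]) -> str:
    """Stand-in for an LLM decision; a real agent would query a model here."""
    if text != text.strip():
        return "extract"
    if text != text.lower():
        return "normalize"
    if len(text) > 20:
        return "summarize"
    return "done"


def run_agent(
    text: str,
    policy: Callable[[str, List[str]], str],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Agent: the policy picks each next action; max_steps bounds autonomy."""
    history: List[str] = []
    for _ in range(max_steps):
        action = policy(text, history)
        if action == "done":
            break
        text = TOOLS[action](text)
        history.append(action)
    return text, history
```

In this sketch the workflow always runs all three steps, while the agent runs only the steps the policy judges necessary and stops on its own. The `max_steps` budget is one simple way to keep that freedom bounded.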

Modern architectures are adopting compound AI systems where autonomy is bounded by reliability patterns. This shift mirrors the transition in distributed computing from monolithic applications to microservices, where specialized components communicate to achieve a larger goal.

Systems Engineering as the Bedrock of AI

The central thesis emerging from industry research is that the magic of AI agents arises not from the intelligence of the model itself, but from the systems engineering architecture that surrounds it. Reliability in AI will not come from a better model alone. It will come from applying control theory principles to the cognitive workflow.

Specifically, we are moving from a probabilistic era of AI, where we hope the model gets it right, to a deterministic wrapper era, where systems ensure that the probabilistic output is verified, critiqued, and refined before execution. This requires feedback loops, state machines, and evaluator-optimizer patterns.
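One way to picture an evaluator-optimizer feedback loop is the Python sketch below. It assumes a generator that accepts feedback and a deterministic evaluator that returns a verdict. The names `refine_until_valid`, `stub_generate`, and `check_total` are hypothetical, and the stub generator stands in for a model call.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Verdict:
    ok: bool
    feedback: str = ""


def refine_until_valid(
    generate: Callable[[Optional[str]], str],
    evaluate: Callable[[str], Verdict],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Generate, evaluate, and feed critique back until the draft passes."""
    feedback: Optional[str] = None
    trace: List[str] = []
    for _ in range(max_rounds):
        draft = generate(feedback)
        trace.append(draft)
        verdict = evaluate(draft)
        if verdict.ok:
            return draft, trace
        feedback = verdict.feedback
    # Nothing unverified leaves the loop: fail loudly instead.
    raise RuntimeError(f"no valid output after {max_rounds} rounds")


def stub_generate(feedback: Optional[str]) -> str:
    """Stand-in for a model call: wrong at first, corrected after feedback."""
    return "total=42" if feedback else "total=abc"


def check_total(draft: str) -> Verdict:
    """Deterministic evaluator: the value after 'total=' must be digits."""
    value = draft.partition("=")[2]
    if draft.startswith("total=") and value.isdigit():
        return Verdict(ok=True)
    return Verdict(ok=False, feedback="total must be an integer")


result, trace = refine_until_valid(stub_generate, check_total)
```

The design choice worth noting is the failure path: when the round budget is exhausted, the loop raises rather than returning the last draft, so downstream code never executes on output that failed verification.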

This thesis aligns with the academic focus of institutions like Stevens Institute of Technology, where the convergence of Systems Engineering through the Systems Engineering Research Center (SERC) and Artificial Intelligence through the Stevens Institute for Artificial Intelligence (SIAI) creates the necessary theoretical framework for managing complex, adaptive systems.

Looking Forward

The future belongs to AI Engineers who are, in reality, Systems Architects capable of designing the constraints and channels through which cognitive energy flows. The Agentic Shift of 2026 represents the maturation of Generative AI. We are moving past the novelty of chatting with a bot to the engineering reality of orchestrating a system.

For engineering leaders and architects aiming to build resilient AI infrastructures, the message is clear: invest in architecture, not just model size. The technology is ready. The challenge now is building the systems that make it reliable.